I run the Competitive Programming Club at Sha Tin College. Here, we train talented Computer Science students to participate in the Hong Kong Olympiad in Informatics (HKOI), the largest competitive programming contest in Hong Kong. This year, we got 8 students into the final, 4 honourable mentions, and a silver medal!

HKOI (and all competitive programming contests) run on some sort of online platform, called an online judge, which accepts submissions from contestants, compiles and runs them, judging if the submission is correct. I decided to take the initiative to develop my own platform, so that we could run contests within our school to further improve our programming skills.

Security

Since the purpose of an online judge is literally to run user-submitted code and judge it, it is imperative that we sandbox the code submissions when running them, as otherwise the system could be exploited by arbitrary remote code execution (RCE).

My first instinct was to run all submissions in Docker containers. The plan was to build and run the code in an Alpine Linux image with GCC and Python installed, and then communicate the inputs and outputs between the judge process and the container to test if the user’s submission output the correct answer. I attempted to implement this using a Rust binding for Docker.

However, I quickly found out that there were some flaws with my approach. First of all, there wasn’t an easy way to efficiently communicate to the containers. The typical solution would be to use a network boundary by listening on a port or UNIX domain socket, or alternatively I could use standard streams (stdin and stdout) to write the inputs. This ended up causing many subtle bugs with writing data incorrectly. I also had to choose between spawning a long-running process inside the judge or using Docker’s exec API to spawn a new process for each submission, but both approaches required me to write a separate program to run inside the container. Moreover, I didn’t want to start a container every time someone submits, so I had to try and find a way to keep a “pool” of containers, which was also awkward and error-prone.

Because of these issues, I ended up ditching Docker containers altogether and decided to develop a more lightweight solution by relying on Linux security APIs instead. This included the seccomp (Secure Computing) API for disallowing certain dangerous syscalls, the landlock API for controlling access to the filesystem, and resource limits for controlling the amount of resources that submission programs can access. In the future, I also hope to add support for the namespaces and cgroups APIs, but I think that for now the current judge sandbox should be reasonably secure and fair.

As I did develop my own security solution, however, I am choosing to run the entire judge process in a container or VM just in case.

Judge backend

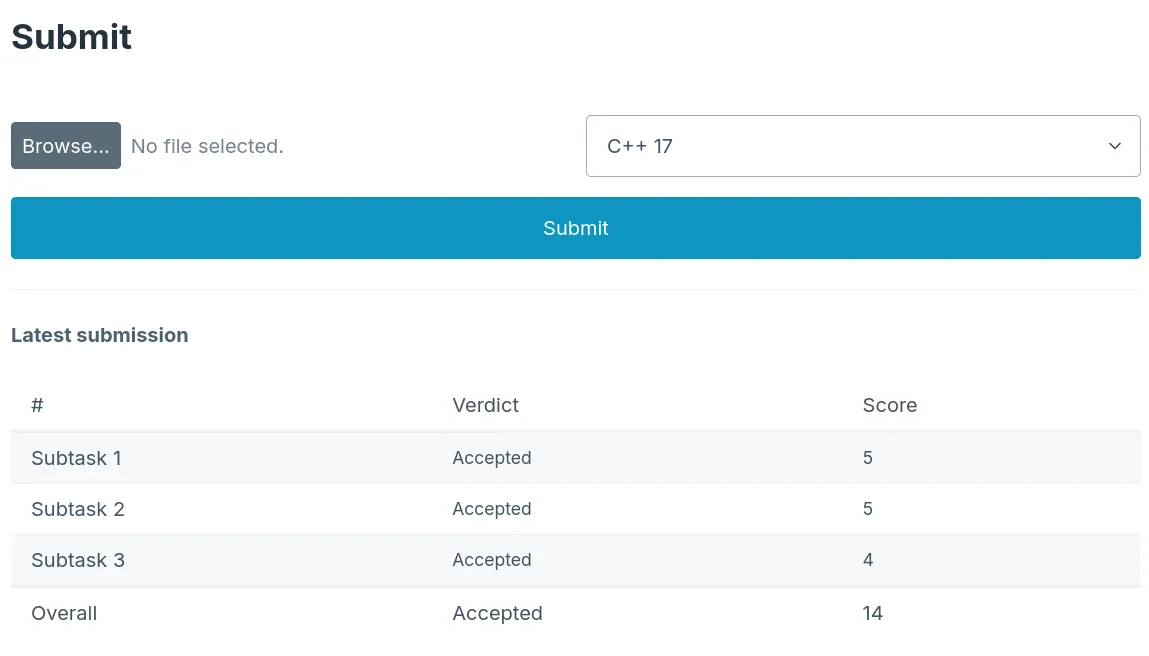

After I implemented the sandbox, I moved on to the actual judge backend. A simple judge backend is actually relatively simple; when the user submits, the backend takes the output of the program for each input and verifies that it is correct, awarding an appropriate amount of points.

Often, for harder contests, each task may be split into subtasks, which are smaller parts of the task that can award partial points even if the submission does not obtain a full score. There are multiple ways that the judge can award points for subtasks: HKOI uses a method where the highest score for each subtask across all submissions is counted, while another method could be counting the maximum total score from all submissions for each task. I have currently only implemented the former strategy, but it should be easy to add more in the future.

One other interesting thing about the judge’s executor is that it runs the tests in parallel. After compiling the user’s code, the judge will use a thread pool with rayon to execute as many tests in one go as possible, determined by the number of threads on the system. Instead of running one test at a time, my 16-core laptop can judge 16 tests at the same time, greatly speeding up judging. This is possible because the resource usage measurements measure CPU seconds instead of real time, so (in theory) each submission is still judged fairly even if other submissions are being judged at the same time. In practice, the Linux namespace API could probably improve fairness in demanding scenarios.

This is especially important because submissions are required to finish within a time limit (usually one second), and a large part of the difficulty in competitive programming is finding a solution that can not only always produce the correct answer, but do so efficiently. If the user’s submission always exceeds the time limit and submissions are not run in parallel, than 100 tests could take up to 100 seconds! I also want to implement test skipping to save even more time when the user’s submission clearly will exceed the time limit.

In order to judge tasks that may have partial solutions, I will add support for custom grader programs in the future, that will output the score that a specific output should be awarded for each test. For example, some tasks might have tests that accept multiple solutions, or other tasks might give a 50% score to an inefficient partial solution while awarding full scores to optimal solutions.

User interface

For the user interface, I decided to choose a very minimal frontend stack that uses axum for routing, askama for templating, HTMX for client side interactivity, and Pico CSS for styling. I found this stack to be mostly pleasant to use and quite refreshing to use (which means, in other words, allowed me to avoid writing JavaScript). However, in the future, I do see the value in switching back to a traditional JS-based frontend, possibly with SvelteKit, as I have found that using HTMX does occasionally lead to slightly weird patterns or makes it hard to achieve a certain kind of UX, which JavaScript could do easily.

Next steps

First and foremost, I plan to use the online judge to host some internal contests at my school. This should hopefully help me weed out subtle bugs that I may have missed during development, as well as give the contestants a fun activity to participate in and improve their programming skills. After participating in the HKOI finals, I realized that there is a lot of talent in competitive programming in Hong Kong, and I hope that this kind of contest platform could be refined to help host more contests and activities for anyone to join.

I also mentioned in the sections above that I have a lot of features planned but not currently implemented. This is intentional, since I have learned from experience the value of getting an initial prototype out and testing it with users to find bugs and usability issues before making the project too complex, which risks even more bugs and weird interactions between features. Hopefully, after the first round of testing, I can implement those missing features and upgrade the contest platform.

To summarize, building my own online judge was a very fun project for me to do. It has also taught me a lot about security and containerization technology on Linux. The whole process took a few hours each day for a week and a half, and I hope to spend more time in the future continuing this project!

Also read about the technical aspects of this project or check out the source code!